SPHiNX

FLEXiBLE TiERiNG

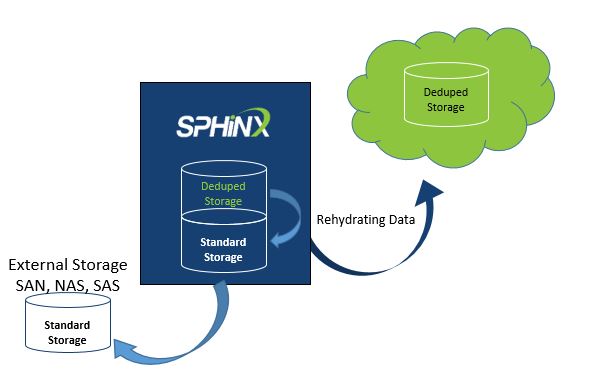

FLEXiBLE TiERiNG is a data management technique that optimizes how data is distributed across the storage network without slowing down replication or backup processes. Because data is usually stored on various types of media according to availability, recovery requirements, and available resources, the tiered storage method consists of sorting data and assigning it to specific folders. Using a two-tier or three-tier architecture (in some cases, five or six tiers), the data tiering method automatically classifies and manages data, reducing the costs associated with archiving and migration. Breaking storage down into tiers restructures data at a granular level, depending on the implementation scenario and the architecture behind the overall data management process. With FLEXiBLE TiERiNG, you can mount data volumes or pools to distribute data optimally across the storage network.
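As a rough illustration of automatic classification, the Python sketch below assigns each data item to a tier based on how long ago it was last accessed. This is not the SPHiNX implementation: the tier names (`tier1_hot`, `tier2_warm`, `tier3_cold`), the 30- and 180-day thresholds, and the `DataItem`, `assign_tier`, and `plan_tiering` names are all hypothetical, and a real policy could also weigh recovery requirements or media cost.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical tier policy, ordered hot -> cold. Each entry gives the maximum
# time since last access for that tier; None marks the catch-all coldest tier.
TIER_POLICY: list[tuple[str, Optional[timedelta]]] = [
    ("tier1_hot", timedelta(days=30)),
    ("tier2_warm", timedelta(days=180)),
    ("tier3_cold", None),
]


@dataclass
class DataItem:
    path: str
    last_access: datetime


def assign_tier(item: DataItem, now: datetime, policy=TIER_POLICY) -> str:
    """Return the first (hottest) tier whose age threshold covers the item."""
    age = now - item.last_access
    for tier, max_age in policy:
        if max_age is None or age <= max_age:
            return tier
    raise ValueError("policy has no catch-all tier")


def plan_tiering(items: list[DataItem], now: datetime, policy=TIER_POLICY) -> dict[str, list[str]]:
    """Group item paths by target tier, e.g. as input to a migration job."""
    plan: dict[str, list[str]] = {tier: [] for tier, _ in policy}
    for item in items:
        plan[assign_tier(item, now, policy)].append(item.path)
    return plan
```

For example, with `now = datetime(2024, 1, 1)`, an item last accessed two days earlier lands in `tier1_hot`, one idle for 90 days goes to `tier2_warm`, and one idle for 1,500 days falls through to the catch-all `tier3_cold`.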

To save on storage, deduplicated data should be off-loaded to standard storage immediately; once rehydrated, the data is available and ready for restore processes. FLEXiBLE TiERiNG integrates with any data management environment, external storage infrastructure, operating system, and tape or cloud storage, keeping data available at all times without disrupting other processes.
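The off-load and rehydration flow can be sketched with a toy chunk store, assuming fixed-size chunking and SHA-256 digests as chunk identifiers. The `TieredChunkStore` class, its two in-memory tiers, and the 4-byte chunk size are hypothetical simplifications for illustration, not the product's design; "rehydration" here simply means rebuilding the original bytes from the ordered list of chunk digests (the recipe).

```python
import hashlib

CHUNK_SIZE = 4  # tiny, for illustration only (assumption)


class TieredChunkStore:
    """Hypothetical two-tier chunk store: a fast dedup tier and standard storage."""

    def __init__(self) -> None:
        self.fast: dict[str, bytes] = {}      # digest -> chunk; new chunks land here
        self.standard: dict[str, bytes] = {}  # digest -> chunk; cheaper off-load target

    def dedupe(self, data: bytes, chunk_size: int = CHUNK_SIZE) -> list[str]:
        """Split data into fixed-size chunks, store each unique chunk once,
        and return the recipe (ordered list of chunk digests)."""
        recipe = []
        for i in range(0, len(data), chunk_size):
            chunk = data[i:i + chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.fast and digest not in self.standard:
                self.fast[digest] = chunk
            recipe.append(digest)
        return recipe

    def offload(self) -> int:
        """Move every chunk from the fast tier to standard storage."""
        moved = len(self.fast)
        self.standard.update(self.fast)
        self.fast.clear()
        return moved

    def rehydrate(self, recipe: list[str]) -> bytes:
        """Rebuild the original bytes from a recipe, reading whichever tier holds each chunk."""
        parts = []
        for digest in recipe:
            chunk = self.fast.get(digest)
            if chunk is None:
                chunk = self.standard[digest]
            parts.append(chunk)
        return b"".join(parts)
```

Storing `b"ABCDABCDABCDXYZ"` keeps only two unique chunks; after `offload()` empties the fast tier, `rehydrate(recipe)` still returns the original bytes, now read from standard storage.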

Reduce costs, time, and storage space! Create your own solution, using your own storage components, to connect to your own business infrastructure. Archive inactive data, reuse cold storage, and retrieve data whenever you need it without disrupting processes.

Data growth and data integrity are the two major issues when storing data for future use. In most cases, the finite amount of storage an organization has access to limits both its backup options and the reliability of its backups. The SPHiNX deduplication engine is designed to address both challenges, keeping storage costs low and systems securely backed up.

- Save on storage costs: each piece of data is stored only once, even if it is later updated and changed.
- Improve backup replication speed by copying only the original data with its pointers.
- Optimize storage requirements, ensuring efficient use of the infrastructure.
- Keep the system securely backed up with data classification and storage automation.
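The first two benefits can be illustrated with a minimal, hypothetical deduplicating repository: unique chunks are stored once, each backup is kept as a list of pointers (chunk digests), and replication sends only the chunks the target is missing plus the pointer list. Fixed-size 4-byte chunks, SHA-256 digests, and the `DedupRepository` and `chunk_digests` names are assumptions made for this sketch; production engines typically use much larger and often variable-size chunks.

```python
import hashlib


def chunk_digests(data: bytes, chunk_size: int = 4) -> list[tuple[str, bytes]]:
    """Split data into fixed-size chunks and pair each with its SHA-256 digest."""
    return [
        (hashlib.sha256(data[i:i + chunk_size]).hexdigest(), data[i:i + chunk_size])
        for i in range(0, len(data), chunk_size)
    ]


class DedupRepository:
    """Hypothetical backup repository: unique chunks plus per-backup pointer lists."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}       # digest -> chunk, each stored once
        self.backups: dict[str, list[str]] = {}  # backup name -> pointers (digests)

    def backup(self, name: str, data: bytes) -> int:
        """Store a backup; return how many new chunks were actually written."""
        pointers, written = [], 0
        for digest, chunk in chunk_digests(data):
            if digest not in self.chunks:
                self.chunks[digest] = chunk
                written += 1
            pointers.append(digest)
        self.backups[name] = pointers
        return written

    def replicate_to(self, target: "DedupRepository", name: str) -> int:
        """Copy one backup to target, sending only chunks the target lacks
        plus the pointer list. Returns the number of chunks transferred."""
        pointers = self.backups[name]
        sent = 0
        for digest in dict.fromkeys(pointers):  # unique digests, order preserved
            if digest not in target.chunks:
                target.chunks[digest] = self.chunks[digest]
                sent += 1
        target.backups[name] = list(pointers)
        return sent
```

Backing up `b"AAAABBBBCCCCDDDD"` and then a version whose last four bytes changed writes 4 chunks and then just 1, and replicating both versions to an empty target transfers the same 4 + 1 chunks.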