SPHiNX

FLEXiBLE DEDUPLICATION

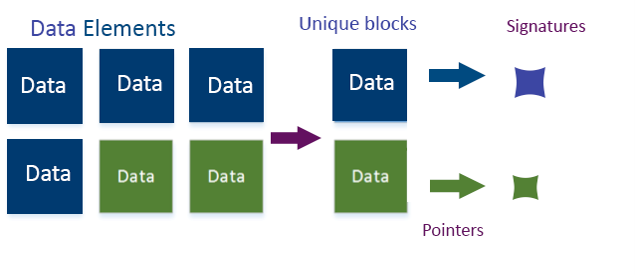

FLEXiBLE deduplication is a revolutionary compression process that optimizes storage usage and replication performance by storing only one copy of any file found to be a duplicate. Deduplication is the heart of the appliance, streamlining its replication processes. At its core, data deduplication consists of storing each unique data sequence only once. By discarding duplicate data elements, the deduplication engine sends only changed data to the storage array. This significantly reduces the amount of data transmitted and speeds up backups. Deduplication is also used for replication: only unique blocks are sent from the source to the target, and for a block that is not unique, only a pointer to the existing unique block is sent, which reduces bandwidth utilization.
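The replication behaviour described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the SPHiNX implementation: the function names `plan_replication` and `payload_bytes`, the message tuples, the 4-byte sample blocks, and the use of SHA-256 as the block signature are all hypothetical choices made for the example.

```python
import hashlib


def plan_replication(blocks, target_digests):
    """Decide, per block, whether to send the data or only a pointer.

    blocks: iterable of bytes objects read at the source.
    target_digests: set of hex digests the target already holds. It is
    updated as blocks are scheduled, so repeats within one run also
    become pointers.
    Returns a list of ("data", digest, block) or ("ref", digest) tuples.
    """
    messages = []
    for block in blocks:
        digest = hashlib.sha256(block).hexdigest()  # assumed signature function
        if digest in target_digests:
            messages.append(("ref", digest))          # pointer only
        else:
            messages.append(("data", digest, block))  # unique block
            target_digests.add(digest)
    return messages


def payload_bytes(messages):
    """Count the block bytes that actually cross the network."""
    return sum(len(m[2]) for m in messages if m[0] == "data")


blocks = [b"AAAA", b"BBBB", b"AAAA", b"CCCC", b"BBBB"]
messages = plan_replication(blocks, set())
print([m[0] for m in messages])  # ['data', 'data', 'ref', 'data', 'ref']
print(payload_bytes(messages))   # 12
```

In this toy run, 12 of the 20 source bytes are transmitted as block data; the two repeated blocks travel as pointers only.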

Deduplication at the source removes redundant blocks before any data is sent to the backup target (client or server). Source-based deduplication reduces bandwidth and storage use and requires no additional hardware. With target deduplication, backups are first sent over the network to a remote location and deduplicated there. Although it requires more network bandwidth, target deduplication is more efficient than source deduplication, particularly for large data sets.

The deduplication process starts at the original storage location. When data is replicated, it arrives at the new storage location already deduplicated. Deduplication intelligently compresses data so that it takes up only a fraction of the storage space. In essence, it is a simple data compression process that eliminates redundant copies of data and stores a single instance. Copies of data blocks are replaced with pointers to the unique copy.

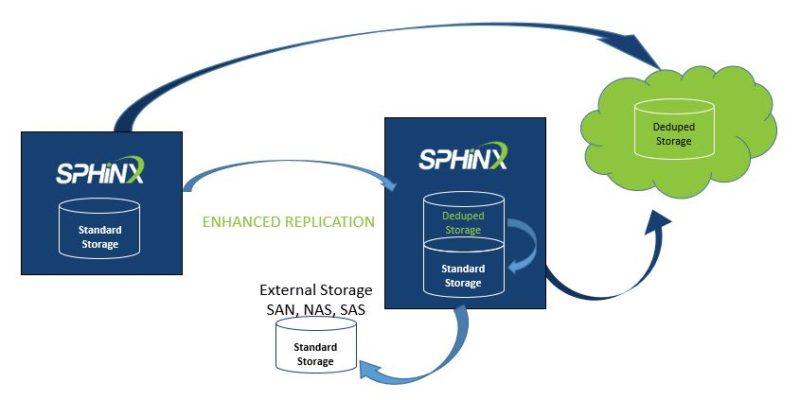

Once replicated, data is stored in a local repository (internal disks) that serves as transitional storage (deduped storage); from there, data can be off-loaded to external disks (external storage). The local repository is the reference point for the entire deduplication process: files that are about to be backed up or archived are compared with any stored copy of the same file. After checking the file's attributes against an index, the deduplication engine removes any duplicate files and keeps only one copy. If the file is unique but has been updated, only a stub that points to the original file is stored.
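A file-level index check of this kind might look like the sketch below. It is illustrative only: the text does not say which file attributes the index holds, so the fingerprint used here (content length plus a SHA-256 digest) is an assumption, as are the class name `FileRepository` and its methods. The sketch covers only the duplicate case, where a stub pointing to the stored copy replaces the second file.

```python
import hashlib


class FileRepository:
    """Toy local repository that keeps one copy per distinct file."""

    def __init__(self):
        self.index = {}  # fingerprint -> path of the stored copy
        self.stubs = {}  # duplicate path -> path of the stored copy

    @staticmethod
    def fingerprint(content):
        # Assumed attributes: size and content digest.
        return (len(content), hashlib.sha256(content).hexdigest())

    def back_up(self, path, content):
        key = self.fingerprint(content)
        original = self.index.get(key)
        if original is None:
            self.index[key] = path      # first copy: keep it
            return "stored"
        if original == path:
            return "unchanged"          # same file, same content
        self.stubs[path] = original     # duplicate: keep a stub only
        return "stub"


repo = FileRepository()
print(repo.back_up("a/report.doc", b"quarterly numbers"))  # stored
print(repo.back_up("b/copy.doc", b"quarterly numbers"))    # stub
print(repo.stubs)  # {'b/copy.doc': 'a/report.doc'}
```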

If the backup target is not a physical storage unit, SPHiNX can back up data to a deduped repository hosted in the cloud. The deduplication process runs the same way as for the local repository. The file is broken into blocks of the same fixed size, and each block is processed with a hash algorithm. Once a block is analyzed, its signature is compared against the deduplication table. Only if the block’s signature is not found in the table is the data backed up and stored on disk.
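The block-level steps above (split into fixed-size blocks, hash each block, look the signature up in the deduplication table, store only on a miss) can be sketched as follows. The 4-byte block size, the SHA-256 hash, the in-memory dictionary standing in for both the table and the disk, and the names `split_blocks`, `back_up`, and `restore` are assumptions made for illustration; the source does not specify any of them.

```python
import hashlib

BLOCK_SIZE = 4  # unrealistically small, chosen so the example is easy to follow


def split_blocks(data, size=BLOCK_SIZE):
    """Break data into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def back_up(data, table, size=BLOCK_SIZE):
    """Store only blocks whose signature is not yet in the table.

    table: dict mapping signature -> block bytes.
    Returns (recipe, written): the ordered list of signatures needed to
    rebuild the data, and the number of blocks newly stored.
    """
    recipe = []
    written = 0
    for block in split_blocks(data, size):
        signature = hashlib.sha256(block).hexdigest()
        if signature not in table:
            table[signature] = block  # unique block: store it
            written += 1
        recipe.append(signature)      # always record the pointer
    return recipe, written


def restore(recipe, table):
    """Rebuild the original data by following the pointers."""
    return b"".join(table[s] for s in recipe)


table = {}
recipe, written = back_up(b"ABCDABCDEFGHABCD", table)
print(written, len(recipe))  # 2 4
print(restore(recipe, table) == b"ABCDABCDEFGHABCD")  # True
```

Here four blocks are referenced but only two are stored, and the original data can still be rebuilt from the recipe of signatures.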